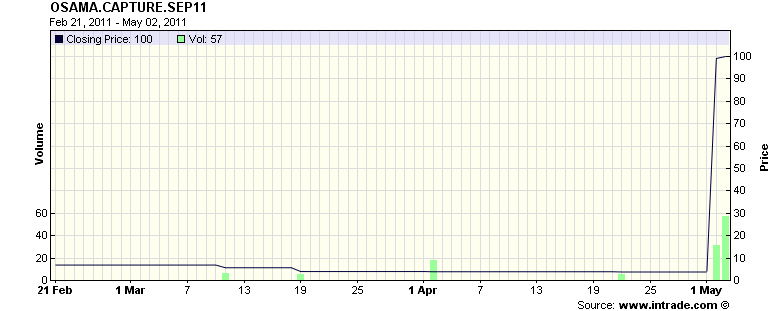

How do we know, now, that Intrade’s market price was not an accurate estimate of the probability that bin Laden would be killed or captured by September 2011? Is a prior estimate of a 50 percent likelihood that a tossed coin will come up heads wrong if the coin comes up “100 percent” heads (and not half-heads and half-tails)?

I’m not buying Chris’s implied definition of success and failure.

However, one might ask Robin Hanson what the Intrade market’s performance implies about the usefulness of his Policy Analysis Market idea.

Note that I was contrasting the InTrade-Bin-Laden failure with the high expectations set by Robin Hanson, Justin Wolfers and James Surowiecki.

Also, other than statisticians, most people don’t take a probabilistic approach to InTrade’s predictions. That’s the big misunderstanding, which is one part of the big fail of the prediction markets.